AI browsers are a hot mess of security risks

Excellent reporting by Max Zeff at TechCrunch (disclosure: my day job), who dives into the dangers and risks of AI-enabled web browsers:

Cybersecurity experts who spoke to TechCrunch say AI browser agents pose a larger risk to user privacy compared to traditional browsers. They say consumers should consider how much access they give web browsing AI agents, and whether the purported benefits outweigh the risks.

Without sufficient safeguards, [prompt injection] attacks can lead browser agents to unintentionally expose user data, such as their emails or logins, or take malicious actions on behalf of a user, such as making unintended purchases or social media posts. Prompt injection attacks are a phenomenon that has emerged in recent years alongside AI agents, and there’s not a clear solution to preventing them entirely.

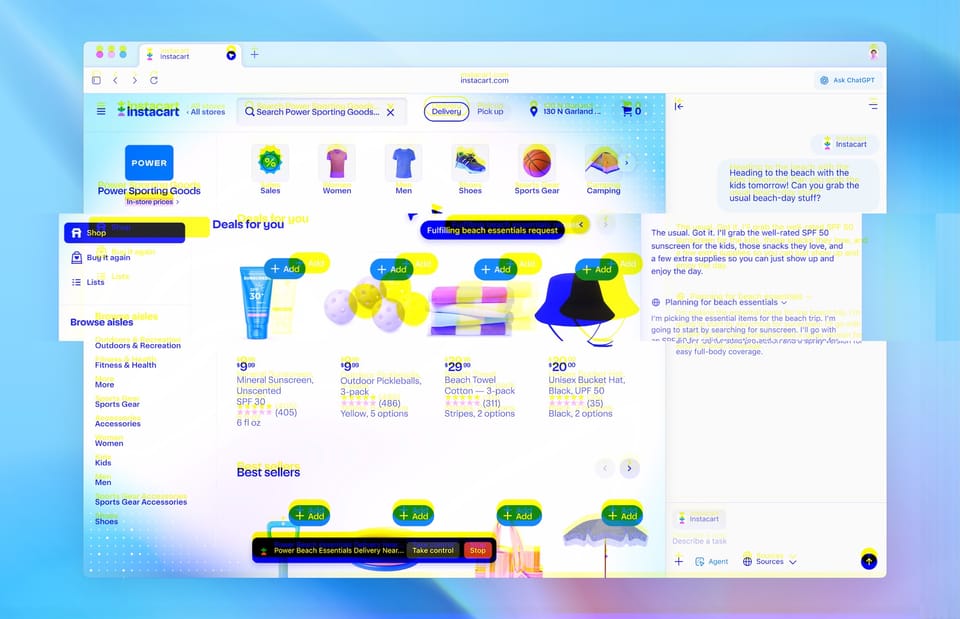

You've probably already seen some of these AI browsers flying around the web: Perplexity's Comet, Microsoft's new Copilot-infused Edge browser, and OpenAI now has its ChatGPT Atlas browser, to name a few, and there are plenty of others out there.

These AI browser makers claim that one of the main (ostensible) benefits of having AI baked into your browser is having the AI agent act autonomously on the user's behalf, such as clicking around on a web page, filling out forms — even booking a hotel — and other seemingly mundane tasks, like reading your open tabs so that the AI can summarize information for you (though, its accuracy may vary wildly). AI browsers don't just access everything that you see in your browser window, but they also request a dizzying amount of access to your email and online accounts, so that the AI can send you reminders and email your friends when you reserve a table at a restaurant — things that you obviously can't do yourself.

But adding AI to a web browser massively increases the attack surface that malicious hackers can use to steal your data from that browser — from your recent internet history to your saved passwords and credit card numbers — and potentially beyond, including the private data saved on your computer or phone.

Historically, to compromise or steal data from ordinary web browsers like Chrome, Firefox, or Safari (for example), someone would need to exploit a vulnerability in the browser itself. These vulnerabilities typically aren't easy to find, and the ones that are discovered can sell for millions of dollars.

But now with AI baked in, it's now possible to exploit AI browsers by simply asking the browser for the user's data by way of a prompt injection attack.

Prompt injection attacks are a class of security vulnerability that can be used to trick AI agents, such as those embedded in your browser, into performing actions that the agent is not supposed to. Malicious hackers can hide text-based instructions in the source code of a webpage, or embedded as an image in an email, which might not be visible by the human eye but can still be read and understood by the AI agent when it's loaded in your browser. This type of attack can trick an AI browser into spitting out the user's data to a remote server controlled by the attacker.

These prompt injection attacks are capable of stealing private data stored in the browser, or performing malicious tasks as if the legitimate user had themselves requested, like accessing one of your online accounts. Security researchers have already found several security bugs in Perplexity's Comet browser since it rolled out in July. Soon after OpenAI debuted its ChatGPT Atlas browser, security researcher Bob Rudis (aka @hrbrmstr) found that the OpenAI-made browser was also vulnerable to prompt injection.

These security issues are here, right now, and exploitable in ways that haven't been possible for traditional software, and something that has been acknowledged by the very people creating these AI browsers. OpenAI's CISO says prompt injections are an "unsolved security problem," and browser maker Brave said prompt injections are a "systemic challenge facing the entire category of AI-powered browsers."

But for the benefits that AI browsers claim, the security and privacy tradeoffs just aren't worth it.

Even from a practical point of view, are we ready to give over complete control of your browser (and everything inside it) to an AI company for the sake of filling out some extremely mundane online web form, like booking a hotel? Something so important to your upcoming travel plans that frankly, wouldn't you want to make sure every detail is accurate and not riddled with errors or mistakes, exactly the sort of thing AI is prone to?

Baking in a product known to have significant security defects or weaknesses that can be exploited with ease and over-the-internet is an incredibly bad idea. These products aren't ready for primetime yet — and not even close. These new products might be dressed up as if they're the future of technology, but should really come with enormous warning labels: "Use at your own risk."

Thank you so much for reading ~this week in security~. Please consider a paying subscription. Feel free to reach out with any feedback, questions, or comments about this article: this@weekinsecurity.com.